Opening up Europe's procurement data

15 May 2014

What is the next European dataset that investigative journalists need to look at? This question was raised at the DataHarvest conference back in 2012. The event brings together technologists and reporters from across the EU’s member states. Brigitte, investigative superstar of FarmSubsidy fame and co-host of the conference, had a clear answer: let’s open up TED (Tenders Electronic Daily), the EU’s shared procurement mechanism. Her suggestion triggered a two-year project, which, as of last week, has finally matured into a useful resource for journalists and researchers.

Established in order to promote a single market for government work, TED collects tender notices for large public projects so that companies from all EU countries can bid on those contracts. For journalists, there are many exciting questions such a database would be able to answer: What major projects are being announced? Who is winning the contracts for these projects, and is that decision made prudently and impartially? Who are the biggest suppliers in a particular country or industry?

Big data journalism?

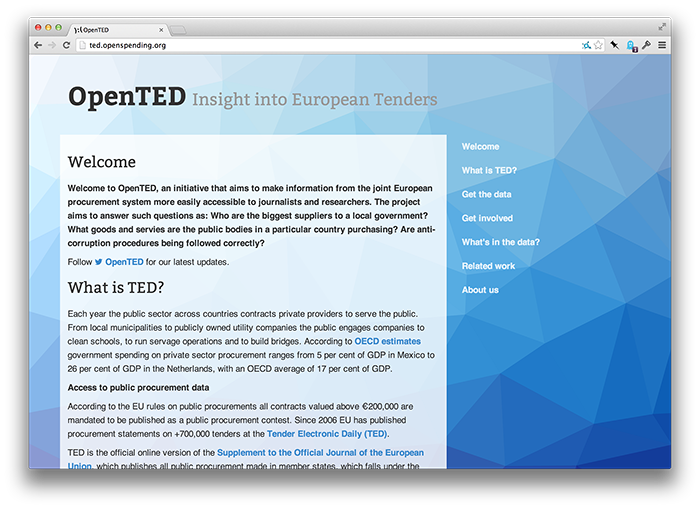

To answer these questions, however, we would need to look at the database directly - not just at the web site provided by the EU Publications Office. The OpenTED project, started by Anders Pedersen and Joost Cassee, was initially born as an attempt to scrape the official TED web site. Downloading over 240 GiB of web pages and converting them to a dataset was no small task, but by DataHarvest 2013, a messy extract was available.

Soon, however, this first version of OpenTED ran into a number of practical problems. Without an interface, the data was impossible for the journalists at last year’s DataHarvest to use, and it was so messy that even Sunlight Foundation’s finance data genius Kaitlin Devine couldn’t help us tell apart the errors we’d made while scraping from the inconsistencies in the data itself. To make things worse, in June 2013 the EU Publications Office updated the TED web site to make bulk scraping impossible - leaving us without a way to update the data.

Scraping with words

After being locked out, Anders and I decided to take a radical step for a bunch of nerds: talk to the EU. Speaking to the Publications Office’s unit lead, we were surprised to learn that they were already in the process of changing their licensing regime: while access to machine-readable data had been sold to re-users in the past, the plan was to make the data freely available in January 2014. Thanks, Neelie!

So, in early January, I pinged @EUTenders on Twitter, asking what happened to the publication plan. Expecting some sort of rejection, I was surprised to promptly receive a direct message with credentials for their raw data file server. The site offered DVD images for download, with XML dumps of TED’s data since 2011 - this was exactly what we were looking for.

As a test case for the data, I decided to import EU agency contracts into OpenInterests.eu, a web site that tracks links between European institutions and business. Finally, we had a stable workflow for getting a reasonably clean version of the tenders.

Building a community

As DataHarvest 2014 approached, we decided to make an updated version of OpenTED, offering slices of the newly opened data in an accessible format (CSV) and in small portions, divided by country and year, so that journalists without database skills would be able to grab the data and explore it in a spreadsheet application.
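To illustrate the idea of slicing by country and year, here is a minimal sketch. It is not the actual OpenTED export code, and the column names `country` and `year` are assumptions made for the example rather than the real field names in the TED data.

```python
import csv
from collections import defaultdict
from pathlib import Path


def split_by_country_and_year(rows, out_dir):
    """Write one CSV file per (country, year) pair and return the paths written.

    `rows` is an iterable of dicts. The keys "country" and "year" are
    hypothetical column names chosen for this sketch.
    """
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)

    # Group the rows in memory; fine for a sketch, not for very large dumps.
    groups = defaultdict(list)
    for row in rows:
        groups[(str(row["country"]), str(row["year"]))].append(row)

    written = []
    for (country, year), group in sorted(groups.items()):
        # Use every column seen in this slice, in first-seen order.
        fieldnames = list(dict.fromkeys(key for row in group for key in row))
        path = out_dir / f"{country}-{year}.csv"
        with path.open("w", newline="", encoding="utf-8") as fh:
            writer = csv.DictWriter(fh, fieldnames=fieldnames)
            writer.writeheader()
            writer.writerows(group)
        written.append(path)
    return written
```

Each resulting file is small enough to open in a spreadsheet, which is the point of the exercise: a file per country and year rather than one large database dump.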

At the same time, Twitter’s weird serendipity continued to work in my favour: not only did the Publications Office agree to send representatives to DataHarvest (see their slides), I also ran into SpendNetwork’s Ian Makill, who was about to finish an API for TED data. He’s already done some amazing analysis of tender timelines, based on the TED data. Furthermore, Ada Homolova from DataJLab agreed to present her thesis on using social network analysis to data-mine procurement data for signs of corruption at the conference itself.

So, in many ways, last week’s DataHarvest was a culmination of two years’ work on EU tenders, finally bringing together EU officials, journalists, data nerds and academics to discuss how the data could serve journalistic investigations.

The resulting discussion focussed on the quality and completeness of the data. Many pieces of essential information are missing - including many contract values and supplier names. Additionally, the existing data is very messy, particularly when it comes to clearly identifying the public body and economic operator involved in a contract.
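A first pass at these two problems, missing fields and inconsistent names, could look something like the sketch below. The column names `supplier_name` and `contract_value` are assumptions for the example, and the normalization is deliberately crude: it will not merge genuinely different spellings of the same company.

```python
import re
from collections import Counter


def normalize_name(name):
    """Crude normalization: lowercase, drop punctuation, collapse whitespace."""
    cleaned = re.sub(r"[^\w\s]", " ", name.lower())
    return " ".join(cleaned.split())


def summarize(rows):
    """Count missing fields and rank suppliers by number of contracts.

    `rows` is a list of dicts; "supplier_name" and "contract_value" are
    hypothetical column names used only for this sketch.
    """
    missing_value = 0
    missing_supplier = 0
    suppliers = Counter()
    for row in rows:
        if not (row.get("contract_value") or "").strip():
            missing_value += 1
        name = (row.get("supplier_name") or "").strip()
        if not name:
            missing_supplier += 1
        else:
            suppliers[normalize_name(name)] += 1
    return {
        "missing_value": missing_value,
        "missing_supplier": missing_supplier,
        "top_suppliers": suppliers.most_common(10),
    }
```

Even a simple count like this gives a feel for how much of a country's slice is usable before anyone starts drawing conclusions about who the biggest suppliers are.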

What will happen next?

In many ways, the next step is up to the journalists who attended DataHarvest. We have, I think, created a rich resource for them to perform investigations with. We’ve set up a network of technologists who are ready to support analysis of the data - and Ian has committed to further clean up the contracts over the following months.

I’m looking forward to seeing how journalists from across Europe will pick this up and tell the story of how our governments conduct their business - who benefits, and whether those decisions are made correctly.

Oh, and I’d love to know more about that 700 trillion Euro building they’re constructing in Galway.

Resources

The data & tools:

- OpenTED data archive, the main result of this work.

- The Usual Suppliers - A little analysis application for journalists.

Some code:

- pudo/monnet - the scrapers for TED (and many other EU data sources)

- opented/opented - the CSV export site

- opented/the-usual-suppliers - the analysis mini-app linked above